Revolutionizing Chest X-Ray: A New Neural Network Model

In a study recently published in Radiology, researchers describe a new neural network model that combines clinical patient data with imaging data to substantially improve the diagnostic performance of chest X-rays. The finding could reshape the field of medical imaging and considerably improve patient care.

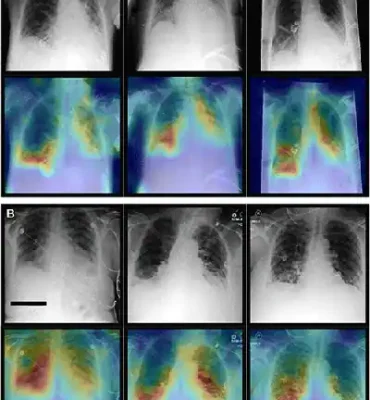

Representative anteroposterior radiographs (top) and corresponding attention maps (bottom), acquired with patients in the supine position.

A. Panel A shows the main diagnostic findings from the researchers' internal dataset. On the left is a 69-year-old female patient with congestion, pneumonic infiltrates, and effusion. In the center is a 64-year-old patient with similar findings, and on the right is a 49-year-old patient with cardiomegaly and pneumonic infiltrates.

B. Panel B shows the main diagnostic findings from the Medical Information Mart for Intensive Care dataset. On the left is a 58-year-old female patient with atelectasis and effusion in the lower lungs. In the center is a 48-year-old female patient with pneumonic infiltrates in the right lower lung. On the right is a 79-year-old male patient with cardiomegaly and pneumonic infiltrates in the right lower lung.

Note that the attention maps consistently focus on the most relevant regions of the images. For instance, when pneumonic opacities are present, the maps highlight the corresponding areas of the lungs. Image credit: Radiological Society of North America.

Processing Data

Model Trained on Imaging and Non-Imaging Data from 82K Patients

Khader and colleagues developed a Transformer model tailored to medical applications. They trained it on both imaging and non-imaging data from more than 82,000 patients.
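To make the idea of combining the two kinds of data more concrete, here is a minimal sketch of how a Transformer-style attention step can weigh image-derived and clinical inputs together. It is not the authors' implementation: the token values, the `attend` helper, and the single query vector are all hypothetical and chosen only for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend(query, tokens):
    """Scaled dot-product attention of one query over a list of tokens.

    Returns the attention weights and the weighted average of the tokens.
    """
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, t) / scale for t in tokens])
    fused = [
        sum(w * t[i] for w, t in zip(weights, tokens))
        for i in range(len(query))
    ]
    return weights, fused

# Hypothetical toy embeddings (assumption): three image patches and
# two clinical parameters, already projected to the same 4-dim space.
image_tokens = [
    [0.9, 0.1, 0.0, 0.2],
    [0.1, 0.8, 0.3, 0.0],
    [0.0, 0.2, 0.7, 0.5],
]
clinical_tokens = [
    [0.5, 0.5, 0.1, 0.0],
    [0.2, 0.0, 0.9, 0.4],
]

# Both modalities are placed in one sequence, so a single attention
# step can draw on imaging and non-imaging information at once.
tokens = image_tokens + clinical_tokens
query = [1.0, 0.0, 0.5, 0.0]  # hypothetical query vector

weights, fused = attend(query, tokens)
for i, w in enumerate(weights):
    kind = "image" if i < len(image_tokens) else "clinical"
    print(f"token {i} ({kind}): weight {w:.3f}")
```

The weights over the image tokens are the kind of quantity an attention map visualizes: patches that receive more weight are the ones the model relies on more for a given query.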

Journal Reference:

Khader, F., et al. (2023) Multimodal Deep Learning for Integrating Chest Radiographs and Clinical Parameters: A Case for Transformers. Radiology. doi:10.1148/radiol.230806.

Source: https://www.rsna.org/